The latest issue of Limn is on ‘The total archive’:

Vast accumulations of data, documents, records, and samples saturate our world: bulk collection of phone calls by the NSA and GCHQ; Google, Amazon or Facebook’s ambitions to collect and store all data or know every preference of every individual; India’s monumental efforts to give everyone a number, and maybe an iris scan; hundreds of thousands of whole genome sequences; seed banks of all existing plants, and of course, the ancient and on-going ambitions to create universal libraries of books, or their surrogates.

Just what is the purpose of these optimistically total archives – beyond their own internal logic of completeness? Etymologically speaking, archives are related to government—the site of public records, the town hall, the records of the rulers (archons). Governing a collective—whether people in a territory, consumers of services or goods, or victims of an injustice—requires keeping and consulting records of all kinds; but this practice itself can also generate new forms of governing, and new kinds of collectives, by its very execution. Thinking about our contemporary obsession with vast accumulations through the figure of the archive poses questions concerning the relationships between three things: (1) the systematic accumulation of documents, records, samples or data; (2) a form of government and governing; and (3) a particular conception of a collectivity or collective kind. What kinds of collectivities are formed by contemporary accumulations? What kind of government or management do they make possible? And who are the governors, particularly in contexts where those doing the accumulation are not agents of a traditional government?

This issue of Limn asks authors to consider the way the archive—as a figure for a particular mode of government—might shed light on the contemporary collections, indexes, databases, analytics, and surveillance, and the collectives implied or brought into being by them.

The issue includes an essay by Stephen J. Collier and Andrew Lakoff on the US Air Force’s Bombing Encyclopedia of the World. I’ve discussed the Encyclopedia in detail before, but they’ve found a source that expands that discussion, a series of lectures delivered in 1946-48 to the Air War College by Dr. James T. Lowe, the Director of Research for the Strategic Vulnerability Branch of the U.S. Army’s Air Intelligence Division. The Branch was established in 1945 and charged with conducting what Lowe described as a ‘pre-analysis of the vulnerability of the U.S.S.R. to strategic air attack and to carry that analysis to the point where the right bombs could be put on the right targets concomitant with the decision to wage the war without any intervening time period whatsoever.’

The project involved drawing together information from multiple sources, coding and geo-locating the nominated targets, and then automating the data-management system.

What interests the authors is the way in which this transformed what was called ‘strategic vulnerability analysis’: the data stream could be interrogated through different ‘runs’, isolating different systems, in order to identify the ‘key target system’:

‘… the data could be flexibly accessed: it would not be organized through a single, rigid system of classification, but could be queried through “runs” that would generate reports about potential target systems based on selected criteria such as industry and location. As Lowe explained, “[b]y punching these cards you can get a run of all fighter aircraft plants” near New York or Moscow. “Or you can punch the cards again and get a list of all the plants within a geographical area…. Pretty much any combination of industrial target information that is required can be obtained—and can be obtained without error” (Lowe 1946:13-14).’

Their central point is that the whole project was the fulcrum for a radical transformation of knowledge production:

‘The inventory assembled for the Encyclopedia was not a record of the past; rather, it was a catalog of the elements comprising a modern military-industrial economy. The analysis of strategic vulnerability did not calculate the regular occurrence of events and project the series of past events into the future, based on the assumption that the future would resemble the past. Rather, it examined interdependencies among these elements to generate a picture of vital material flows and it anticipated critical economic vulnerabilities by modeling the effects of a range of possible future contingencies. It generated a new kind of knowledge about collective existence as a collection of vital systems vulnerable to catastrophic disruption.’

And so, not surprisingly, the same analysis could be turned inwards – to detect and minimise sites of strategic vulnerability within the United States.

All of this intersects with the authors’ wider concerns about vital systems security: see in particular their ‘Vital Systems Security: Reflexive biopolitics and the government of emergency’, in Theory, Culture and Society 32(2) (2015): 19–51:

This article describes the historical emergence of vital systems security, analyzing it as a significant mutation in biopolitical modernity. The story begins in the early 20th century, when planners and policy-makers recognized the increasing dependence of collective life on interlinked systems such as transportation, electricity, and water. Over the following decades, new security mechanisms were invented to mitigate the vulnerability of these vital systems. While these techniques were initially developed as part of Cold War preparedness for nuclear war, they eventually migrated to domains beyond national security to address a range of anticipated emergencies, such as large-scale natural disasters, pandemic disease outbreaks, and disruptions of critical infrastructure. In these various contexts, vital systems security operates as a form of reflexive biopolitics, managing risks that have arisen as the result of modernization processes. This analysis sheds new light on current discussions of the government of emergency and ‘states of exception’. Vital systems security does not require recourse to extraordinary executive powers. Rather, as an anticipatory technology for mitigating vulnerabilities and closing gaps in preparedness, it provides a ready-to-hand toolkit for administering emergencies as a normal part of constitutional government.

It’s important to add two riders to the discussion of the Bombing Encyclopedia, both of which concern techno-politics rather than biopolitics. Although those responsible for targeting invariably represent it as a technical-analytical process – in fact, one of the most common elements in the moral economy of bombing is that it is ‘objective’, as I showed in my Tanner Lectures – it is always also intrinsically political; its instrumentality resides in its function as an irreducibly political technology.

As Stephen and Andrew make clear, the emphasis on key target systems emerged during the Combined Bomber Offensive against Germany in the Second World War, when it was the subject of heated debate. This went far beyond Arthur Harris’s vituperative dismissals of Solly Zuckerman’s arguments against area bombing in favour of economic targets (‘panacea targets’, Harris called them: see my discussion in ‘Doors into nowhere’: DOWNLOADS tab). You can get some sense of its wider dimensions from John Stubbington’s intricate Kept in the Dark (2010), which not only provides a robust critique of the Ministry of Economic Warfare’s contributions to target selection but also claims that vital signals intelligence – including ULTRA decrypts – was withheld from Bomber Command. Administrative and bureaucratic rivalries within and between intelligence agencies did not end with the war, and you can find a suggestive discussion of the impact of this infighting on US targeting in Eric Schmidt’s admirably clear (1993) account of the development of Targeting Organizations.

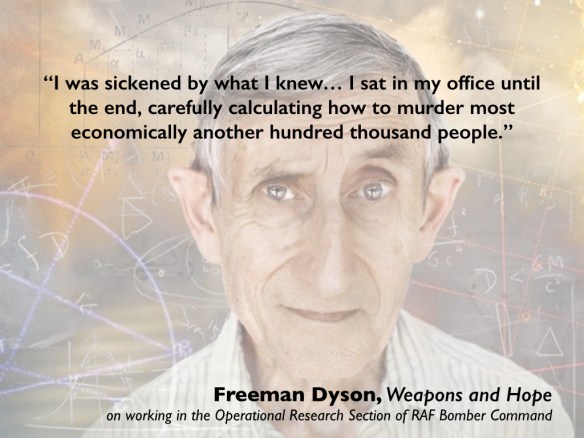

Any targeting process produces not only targets (it’s as well to remember that we don’t inhabit a world of targets: they have to be identified, nominated, activated – in a word, produced) but also political subjects who are interpellated through the positions they occupy within the kill-chain. After the Second World War, Freeman Dyson reflected on what he had done and, by implication, what it had done to him:

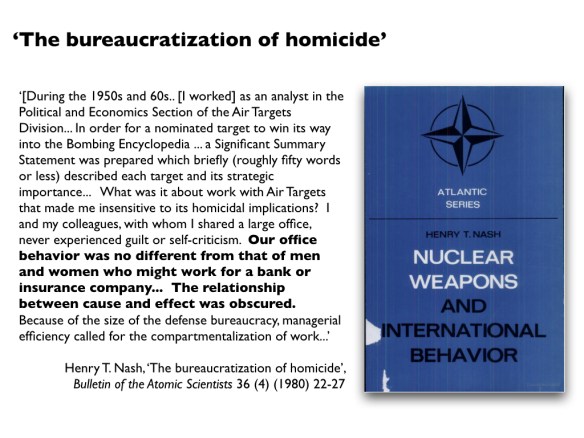

But data-management had been in its infancy. With the Bombing Encyclopedia, Lowe argued, ‘the new “machine methods” of information management made it possible “to operate with a small fraction of the number of people in the target business that would normally be required.”’ But there were still very large numbers involved, and Henry Nash – who worked on the Bombing Encyclopedia – was even more blunt about what he called ‘the bureaucratization of homicide’:

This puts a different gloss on that prescient remark of Michel Foucault’s: ‘People know what they do; frequently they know why they do what they do; but what they don’t know is what what they do does.’

Nash began his essay with a quotation from a remarkable book by Richard J. Barnet, The roots of war (1972). Barnet said this (about the Vietnam War, but his point was a general one):

‘The essential characteristic of bureaucratic homicide is division of labor. In general, those who plan do not kill and those who kill do not plan. The scene is familiar. Men in blue, green and khaki tunics and others in three-button business suits sit in pastel offices and plan complex operations in which thousands of distant human beings will die. The men who planned the saturation bombings, free fire zones, defoliation, crop destruction, and assassination programs in the Vietnam War never personally killed anyone.

‘The bureaucratization of homicide is responsible for the routine character of modern war, the absence of passion and the efficiency of mass-produced death. Those who do the killing are following standing orders…

‘The complexity and vastness of modern bureaucratic government complicates the issue of personal responsibility. At every level of government the classic defense of the bureaucratic killer is available: “I was just doing my job!” The essence of bureaucratic government is emotional coolness, orderliness, implacable momentum, and a dedication to abstract principle. Each cog in the bureaucratic machine does what it is supposed to do.

‘The Green Machine, as the soldiers in Vietnam called the military establishment, kills cleanly, and usually at a distance. America’s highly developed technology makes it possible to increase the distance between killer and victim and hence to preserve the crucial psychological fiction that the objects of America’s lethal attention are less than human.’

Barnet was the co-founder of the Institute for Policy Studies; not surprisingly, he ended up on Richard Nixon’s ‘enemies list’ (another form of targeting).

I make these points because there has been an explosion – another avalanche – of important and interesting essays on databases and algorithms, and the part they play in the administration of military and paramilitary violence. I’m thinking of Susan Schuppli’s splendid essay on ‘Deadly algorithms’, for example, or the special issue of Society & Space on the politics of the list – see in particular the contributions by Marieke de Goede and Gavin Sullivan (‘The politics of security lists’), Jutta Weber (‘On kill lists’) and Fleur Johns (on the pairing of list and algorithm) – and collectively these have provided essential insights into what these standard operating procedures do. But I’d just add that they interpellate not only their victims but also their agents: these intelligence systems are no more ‘unmanned’ than the weapons systems that prosecute their targets. They too may be ‘remote’ (Barnet’s sharp point) and they certainly disperse responsibility, but the role of the political subjects they produce cannot be evaded. Automation and AI undoubtedly raise vital legal and ethical questions – these will become ever more urgent and are by no means confined to ‘system failure’ – but we must not lose sight of the politics articulated through their activation. And neither should we confuse accountancy with accountability.
